👋 Hi, I’m Andre and welcome to my newsletter Data Driven VC which is all about becoming a better investor with Data & AI.


Welcome back to another Data Driven VC “Insights” episode where we cut through noise, scrutinize the latest research, and translate complex findings into practical, evidence-based takeaways for investors, founders, and operators.

Over the past months, reports from MIT, McKinsey, EY and others have flooded our feeds with bold claims about AI adoption. 88% of employees say they use AI, yet only 5% report any meaningful lift in productivity (EY, 2025). That gap is staggering - and telling. It suggests that while AI has become ubiquitous, impact has not.

Most teams are experimenting; very few are extracting real value.

The danger? When decisions are guided by hype rather than measurement, AI becomes a shiny distraction instead of a strategic advantage. Without a rigorous understanding of what actually moves the needle, companies risk pouring time and capital into tools that don’t deliver or, worse, that slow them down.

In this episode, we separate the signal from the noise. We go beyond adoption rates to examine what truly differentiates the top 5% of high-impact AI users:

What are they doing differently?

Is the secret in which tools they use, where they apply AI, or how they integrate it into workflows?

Let’s unpack the data, the patterns, and the playbooks that separate real productivity gains from wishful thinking.

✅ TL;DR (5 Key Takeaways)

Early genAI hype overstated broad productivity gains; while adoption is now widespread, measurable business impact is still uneven and concentrated in specific workflows rather than across entire organizations.

AI currently delivers the highest ROI in high-volume, low-variation tasks with clear metrics, especially routine software engineering, customer support triage, document processing, and repetitive marketing content, often generating 20-50% productivity improvements when paired with human oversight.

AI still broadly falls short in high-judgment and risk-sensitive domains (strategy, legal, compliance) due to hallucination risk, quality demands, and liability exposure, making full automation unrealistic and reducing net gains.

The difference between success and failure depends on execution, not on which tools are used: organizations that track detailed metrics, redesign workflows, and apply governance with human-in-the-loop oversight see sustainable results.

Practical approach: start with narrow pilots in repetitive workflows, benchmark performance before rollout, enforce review for high-stakes tasks, and repurpose saved time toward higher-leverage work rather than assuming headcount replacement.

The Hype We Fell For?

The early 2020s saw a tidal wave of optimism around generative AI. Early academic studies, for example a 2023 randomized experiment by MIT researchers, reported substantial productivity boosts: writing tasks saw approximately 40% speed gains when augmented by AI tools (Noy & Zhang, 2023). The implication felt simple: if writing could be sped up by 40%, why not all knowledge work?

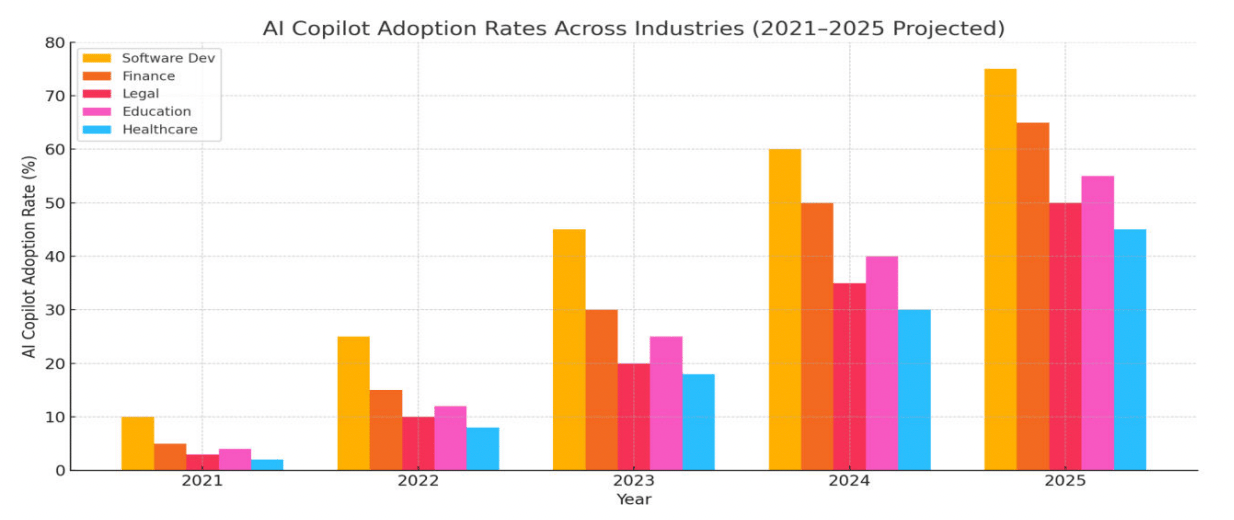

Vendors and analysts amplified that message. Consulting firms and tech industry analysts projected that AI would soon automate massive swathes of office work. At the same time, mass-market attention and innovation/exploration budgets surged: many firms, from startups to enterprises, began integrating AI chatbots, copilots, and automation tools into everyday work (Gupta, 2025).

This hype led many founders, operators, and execs to deploy AI across departments, expecting uniform gains in output or efficiency. But applying task-level breakthroughs (like faster writing) to entire organizations ignored a hard truth: most jobs are full of unknown unknowns. They are a mix of intangible knowledge and many different tasks, which makes them difficult to automate.

As more companies tried to go “all in,” evidence began to diverge from the hype. Surveys now show that while AI tools are nearly ubiquitous, measurable business impact remains the exception rather than the rule (McKinsey, 2025 a). That divide between adoption and impact is now reshaping how the most disciplined teams approach AI.

✈️ KEY INSIGHTS

Generative AI adoption surged on a wave of early optimism and bold productivity claims, but broad organizational impact has largely fallen short. While new AI tools are popping up every minute, measurable gains remain limited because only a fraction of real-world work is easy to automate.

Where AI Actually Works: 4 High-ROI Use Cases to Start With

Based on various studies, dozens of our own interviews with enterprises and SMBs, and vendor data, a clear pattern emerges: AI delivers the most value in high-volume, low-variation tasks with clear success metrics. These are the “low-hanging fruit”, ideal for pilots with measurable impact.

1) Engineering & Developer Work: Routine Code, Boilerplate, Refactoring

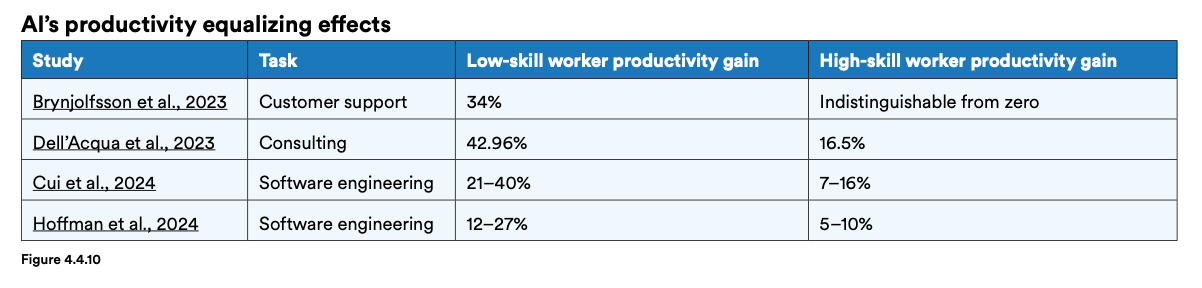

In software development, recent large-scale studies show that AI tools meaningfully accelerate routine coding tasks. One field experiment with 4,867 developers reported a 26% average increase in task completion with AI assistance, and a complementary natural experiment with 187,489 developers found a 12.4% rise in core coding activity, alongside reduced time spent on project management. Additional research indicates that junior developers gain 21-40% in productivity, while senior developers see more modest 7-16% improvements, suggesting that AI particularly enhances lower-skill, repetitive work (Stanford University HAI, 2025; AI Engineer, 2025).

What to track: PR cycle time, lines of code reviewed per engineer per week, bug regression rate, velocity of refactors, and test coverage growth
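As an illustration of the first metric, here is a minimal sketch of how PR cycle time could be computed from opened and merged timestamps. The record format and the sample data are hypothetical; a real setup would pull these timestamps from your version-control platform.

```python
from datetime import datetime
from statistics import median

# Hypothetical PR records: (opened_at, merged_at) as ISO 8601 strings.
PULL_REQUESTS = [
    ("2025-01-06T09:00:00", "2025-01-06T15:00:00"),
    ("2025-01-07T10:00:00", "2025-01-08T10:00:00"),
    ("2025-01-08T08:30:00", "2025-01-08T20:30:00"),
]


def cycle_time_hours(opened_at: str, merged_at: str) -> float:
    """Hours between a PR being opened and being merged."""
    opened = datetime.fromisoformat(opened_at)
    merged = datetime.fromisoformat(merged_at)
    return (merged - opened).total_seconds() / 3600


def median_cycle_time_hours(prs) -> float:
    """Median cycle time; the median is less sensitive to a few stuck PRs than the mean."""
    return median(cycle_time_hours(opened, merged) for opened, merged in prs)


print(median_cycle_time_hours(PULL_REQUESTS))
```

Computing the same number for the weeks before and after an AI rollout gives a like-for-like comparison instead of an impression.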

2) Customer Support & Triage: Ticket Triage, FAQ, First-pass Responses

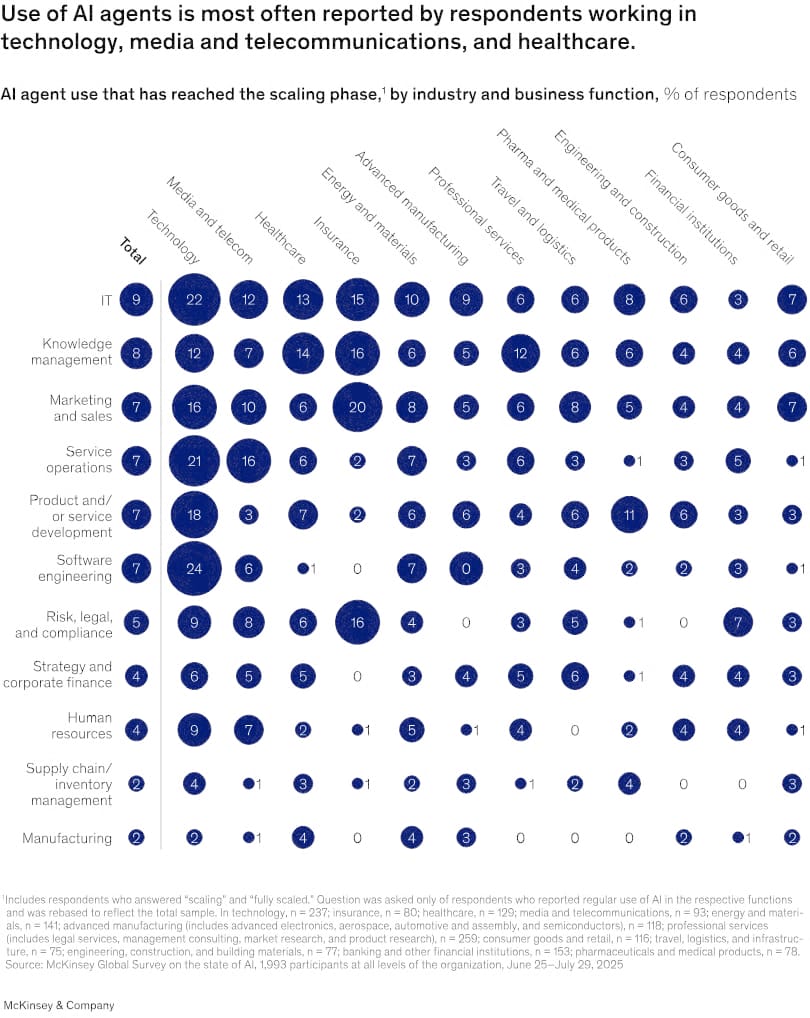

Customer support has become a prominent early use case for AI, as more companies integrate AI agents and copilots into service workflows. McKinsey’s “State of AI 2025” report shows that a large majority of organizations now deploy AI in at least one business function, with service-oriented areas (such as IT, customer operations, and knowledge management) among those reporting early cost and efficiency benefits. These gains typically emerge when companies redesign processes around AI rather than simply layering new tools on top of existing workflows (McKinsey, 2025 b).

Zendesk’s insights on AI-powered customer support confirm this pattern. Their platform documentation shows that chatbots trained on billions of real customer service interactions can deflect a significant portion of repetitive tickets (password resets, FAQ lookups, status checks), allowing human agents to focus on complex or escalated cases. The ticket deflection strategy enables immediate self-service for customers while freeing agents to handle high-value interactions (Zendesk, 2025).

What to track: deflection rate, first-contact resolution rate, cost per ticket, customer satisfaction (CSAT), agent headcount per ticket volume
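To make these definitions concrete, the sketch below computes three of the metrics from a list of tickets. The `Ticket` fields are assumptions for illustration and do not correspond to any specific helpdesk export.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    # All fields are hypothetical; map them to whatever your helpdesk exports.
    resolved_by_bot: bool      # closed without reaching a human agent
    contacts_to_resolve: int   # customer contacts needed until resolution
    handling_cost: float       # fully loaded cost of handling this ticket


def support_metrics(tickets):
    """Return deflection rate, first-contact resolution rate, and cost per ticket."""
    total = len(tickets)
    if total == 0:
        raise ValueError("need at least one ticket")
    deflected = sum(t.resolved_by_bot for t in tickets)
    first_contact = sum(t.contacts_to_resolve == 1 for t in tickets)
    return {
        "deflection_rate": deflected / total,
        "first_contact_resolution_rate": first_contact / total,
        "cost_per_ticket": sum(t.handling_cost for t in tickets) / total,
    }


sample = [
    Ticket(True, 1, 0.5),
    Ticket(True, 1, 0.5),
    Ticket(False, 1, 6.0),
    Ticket(False, 3, 9.0),
]
print(support_metrics(sample))
```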

3) Document Processing & Admin Work: Summaries, Invoices, Contracts

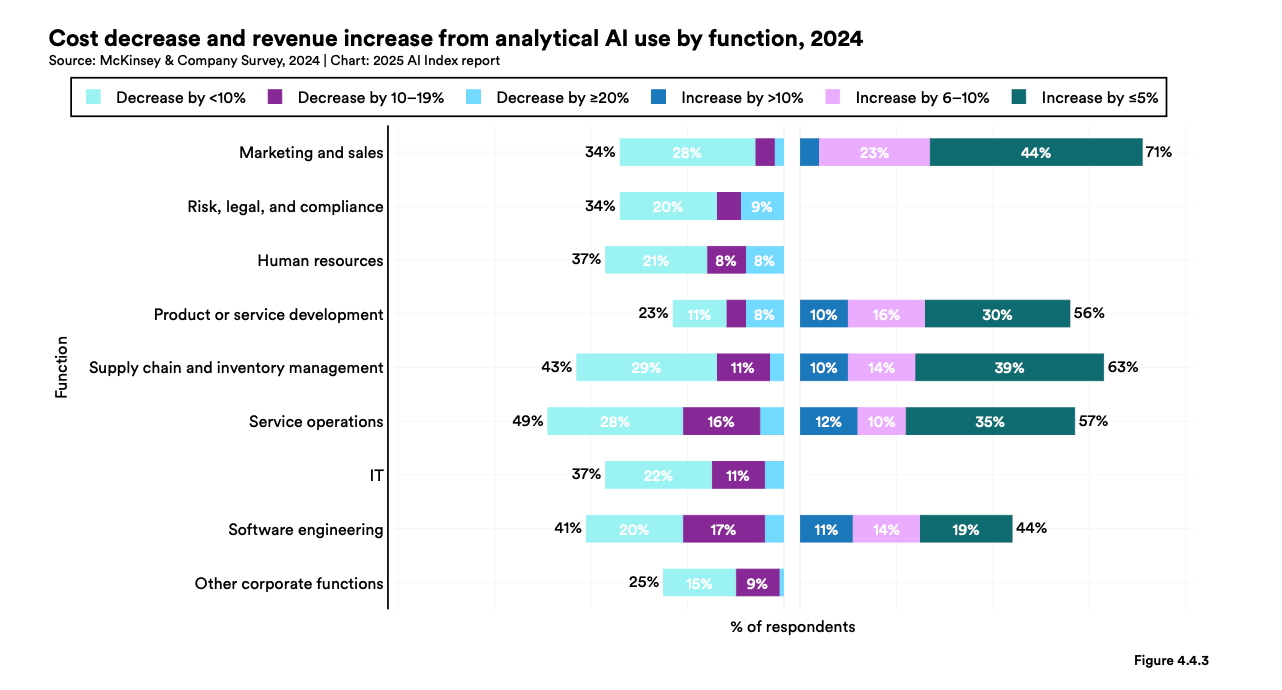

Document workflows, such as summarizing meeting notes, extracting data from invoices, or routing contracts, are among the top areas where AI delivers cost savings. According to a survey mentioned in Stanford HAI’s AI Index Report, 49% of organizations using AI in service operations reported cost reductions, with similar benefits reported by 43% for supply chain and inventory management. Revenue gains from the use of AI are also concentrated here: 57% cited increased revenue in service operations and 63% in supply chain management. These findings show that AI-driven document processing is especially impactful in high-volume, standardized operational functions (Stanford University HAI, 2025).

BCG’s research on AI adoption corroborates this. Companies that deploy AI in everyday tasks, including document processing and administrative automation, realize 10% to 20% productivity gains. When organizations reshape critical functions to incorporate AI, efficiency enhancements can reach 30% to 50% (BCG, 2025; TechEdgeAI, 2025).

What to track: time per document, error rate, throughput per hour, number of processed documents per FTE

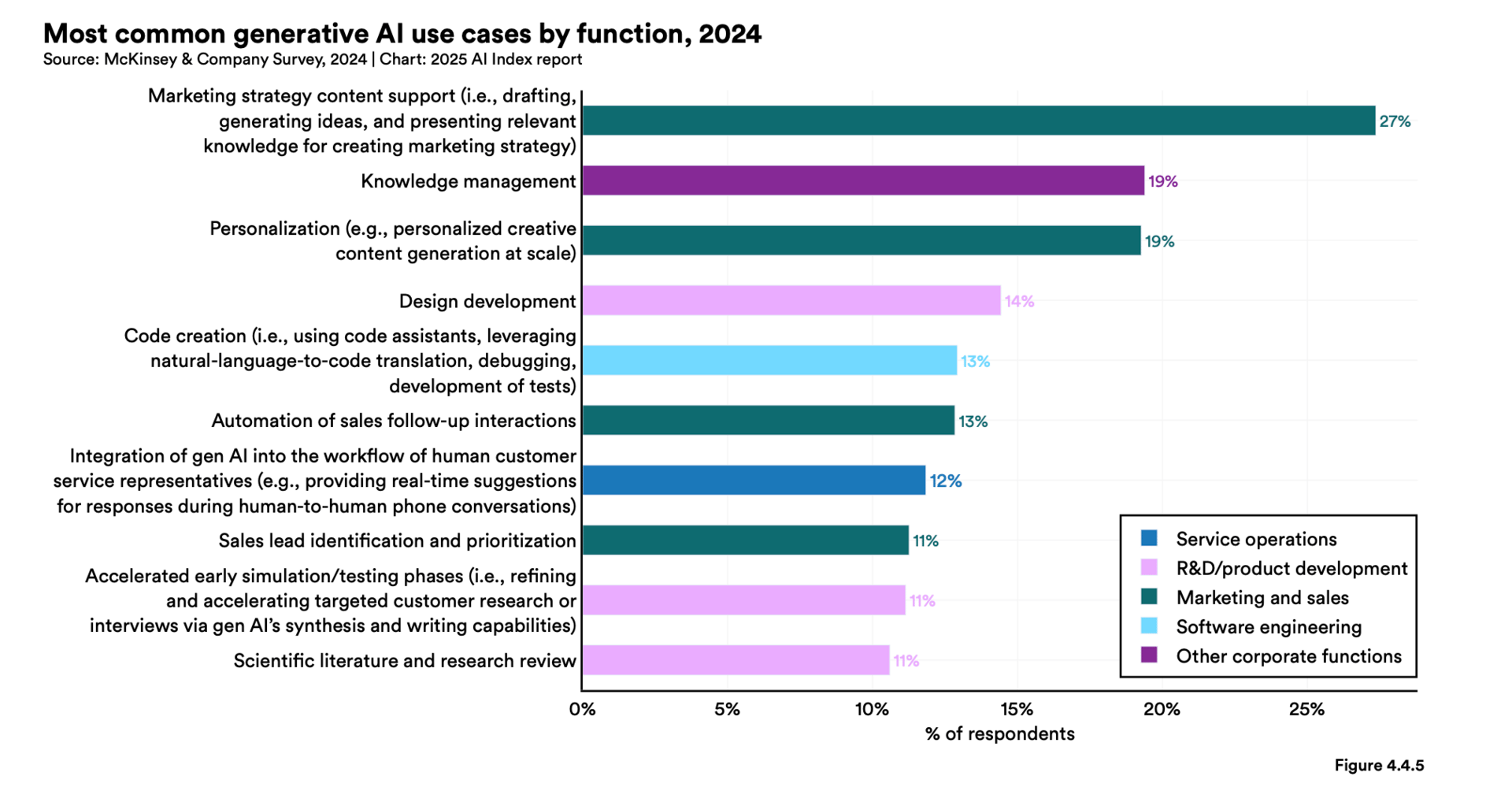

4) Marketing / Content Workflows: Drafts, Variants, Data Enrichment

Marketing teams also report selective wins, particularly in repetitive content workflows: A/B ad copy testing, subject-line generation, SEO title and description creation, and bulk outreach messaging. Research indicates that when AI is used for first-draft generation followed by human refinement, teams see measurable efficiency gains. A recent study of bloggers using AI reported a 30% reduction in content generation time, though the final output still requires editorial oversight (DGT, 2023).

A broader analysis of startup AI adoption shows that companies earn an average of $3.70 for every dollar invested in generative AI technologies, with content creation ROI driven by a 30% reduction in content production time and a 40% increase in creative output volume. However, this assumes proper human review and editing. Purely AI-generated copy rarely meets brand or tone standards without substantial human involvement (Cubeo AI, 2025).

Based on various expert interviews, the majority of these workflows are still text-based automations, and teams have only started to explore image/video generation for creative copy and branding initiatives. But that’s where the big opportunity lies ahead! Shameless plug: Check out our Earlybird portfolio company Black Forest Labs, who offer Nano Banana Pro quality at a fraction of the cost, work with customers such as Canva, Adobe, Meta, Telekom, etc., and just announced their $300m Series B funding yesterday :)

What to track: time per draft, number of variants produced per campaign, conversion lift, CAC change, editing-to-publish ratio

✈️ KEY INSIGHTS

AI delivers the highest ROI in high-volume, low-variation workflows with clear success metrics. Companies see the strongest gains in engineering (routine code, refactoring), customer support (triage, FAQ handling), document processing (invoices, contracts, summaries), and marketing (drafts, variants, A/B copy). These areas show consistent productivity boosts ranging from 20% to over 50% when paired with proper human oversight.

2 Areas Where AI Falls Short

AI is not a silver bullet. Several new studies highlight functions where AI either fails to deliver or introduces new risks.

1) Strategic Decision-Making & High-Judgment Work

Harvard Business Review warns that AI is ill-suited to own strategic choices, even if it can surface data and scenarios. The belief that “more data + bigger models = better decisions” is a form of “dataism” that underestimates context, values, and tradeoffs that, today, only humans can weigh (HBR, 2024). That insight hurts for all the data-driven fans here, but it is just another argument for augmented setups.

McKinsey’s 2025 “Superagency” report similarly finds that in domains with regulatory nuance, long time horizons, or complex tradeoffs, AI tends to add noise rather than clarity unless tightly governed as a support tool, not a decision-maker (McKinsey, 2025 a).

Hallucination research from Harvard’s Misinformation Review shows why: even strong models generate false but plausible outputs at measurable rates (roughly 1-4% for summarization, up to 29% on specialized professional questions), which is unacceptable for high-stakes strategy decisions (Misinformation Review, 2025).
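A rough back-of-the-envelope sketch shows how quickly these rates compound across many outputs. It assumes, purely for illustration, that errors occur independently and at a constant rate per output, which real workloads will not strictly satisfy; the batch size of 50 is also an arbitrary choice.

```python
def expected_errors(error_rate: float, n_outputs: int) -> float:
    """Expected number of faulty outputs at a constant per-output error rate."""
    return error_rate * n_outputs


def prob_at_least_one_error(error_rate: float, n_outputs: int) -> float:
    """Chance that at least one of n outputs is faulty, assuming independent errors."""
    return 1.0 - (1.0 - error_rate) ** n_outputs


# Rates quoted above: roughly 1-4% for summarization, up to 29% on specialized questions.
for rate in (0.01, 0.04, 0.29):
    print(rate, expected_errors(rate, 50), round(prob_at_least_one_error(rate, 50), 3))
```

Under these assumptions, even the lowest quoted rate makes at least one false output in a batch of 50 far from unlikely, which is why a human check stays in the loop for high-stakes decisions.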

2) Legal, Compliance, and Security-Critical Work

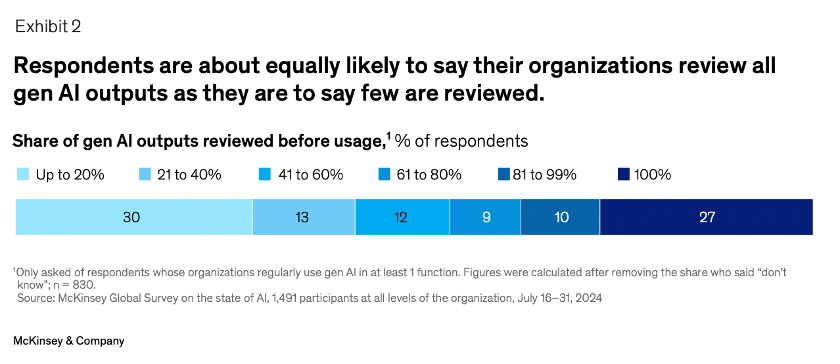

Legal and compliance teams increasingly test AI for contract review and policy checks, but they rarely trust it without full human oversight. Industry analyses highlight that while AI can review standard contracts in seconds and flag issues with high measured accuracy, legal departments still insist on human review because a small error rate carries outsized liability (McKinsey, 2025 b; Virtasant, 2025).

A 2025 State of Contracting survey reports that only about 14% of legal teams actually use AI in live contract review, even though adoption interest is growing rapidly, and nearly all of those maintain mandatory attorney review on outputs. McKinsey’s organizational work shows that when you add governance, audit, and risk-management overhead, the net ROI of AI in high-risk legal / compliance workflows often shrinks or turns marginal (McKinsey, 2025 b; LegalTechTalk, 2025).

✈️ KEY INSIGHTS

Recent research shows that AI delivers limited or even negative value in high-judgment or risk-sensitive functions, because hallucination rates and liability concerns require heavy human oversight. Studies from Harvard, McKinsey, and industry surveys consistently find that in strategy and legal or compliance workflows, AI can assist but cannot reliably replace expert decision-making, which significantly reduces the net productivity gains.

Why Gains Vary: The Three Execution Levers

Based on these patterns, success depends strongly on execution, not on hype. Three critical levers determine whether AI becomes a productivity multiplier or a costly distraction:

Instrumentation & Event Tracking: Without event logging (e.g., ticket volume, PR cycle time, document throughput), real gains remain invisible. The firms delivering sustained improvement almost always paired AI deployment with rigorous data collection and review (McKinsey, 2025 b). We’ve written about this from the perspective of a VC investor in “Transparency in VC: Measure What Matters to Improve Coverage and Performance”

Workflow Redesign and Role Alignment: Simply giving teams AI tools doesn’t guarantee efficiency. Teams that restructured workflows (e.g., rerouting repetitive tasks to AI and repurposing human effort for high-authority tasks) saw durable gains (BCG, 2025). As many of you have learned by now, adoption of new tools and technologies is more change management than anything else.

Human-in-the-loop & Governance: For repetitive tasks, fully automated pipelines work. For any non-trivial or high-stakes work, governance, manual review, and clear escalation policies prevent quality drift and lawsuits (McKinsey, 2025; The Harvard Gazette, 2025). Augmented solutions are the way to go - for VC firms and many other more complex setups.
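The instrumentation lever can start very small. Below is a minimal sketch of event logging to a JSON Lines file, one event per line. The function names, field names, and file layout are assumptions for illustration rather than a reference to any particular analytics tool.

```python
import json
from datetime import datetime, timezone


def make_event(workflow: str, name: str, ai_assisted: bool, **fields) -> dict:
    """Build one event record; extra keyword arguments become additional fields."""
    return {
        "ts": datetime.now(timezone.utc).isoformat(),
        "workflow": workflow,        # e.g. "support", "engineering"
        "event": name,               # e.g. "ticket_closed", "pr_merged"
        "ai_assisted": ai_assisted,  # lets you split metrics by AI vs. non-AI work
        **fields,
    }


def append_event(path: str, event: dict) -> None:
    """Append a single event as one JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")


def load_events(path: str) -> list:
    """Read all events back for analysis."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Tagging every event with `ai_assisted` from day one is the design choice that matters here: it is what later allows a before/after or with/without comparison.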

How to Act On This

If you’re evaluating AI deployment, or investing in companies promising “AI-driven productivity”, here’s a tactical roadmap:

Start with high-volume, low-variation tasks: boilerplate code, support ticket triage, document processing, content drafts. These are where metrics already show ROI.

Instrument first, deploy later: set up logging and capture baseline metrics for work volume, cycle time, error / defect rates before introducing AI.

Pilot narrow & measure impact: run small A/B pilots or holdouts for 4-8 weeks to measure real delta, not just adoption!

Require human review where needed: legal, compliance, strategic decisions should stay human-judged; use AI only for prep or draft work.

Design for role reuse, not headcount reduction: use saved time to shift talent to higher-leverage tasks. That’s where long-term value accrues.
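The "pilot narrow & measure impact" step can be sketched as a comparison between a holdout group and a pilot group on one metric. The example below uses a simple permutation test on the difference in means; this is one reasonable choice among several, not a method prescribed by the studies cited here, and the cycle-time numbers are made up.

```python
import random
from statistics import mean


def relative_change(holdout, pilot) -> float:
    """Relative change of the pilot mean versus the holdout mean (negative = lower)."""
    base = mean(holdout)
    return (mean(pilot) - base) / base


def permutation_p_value(holdout, pilot, n_resamples=10_000, seed=0) -> float:
    """Two-sided permutation test on the difference in means."""
    rng = random.Random(seed)
    observed = abs(mean(pilot) - mean(holdout))
    pooled = list(holdout) + list(pilot)
    n_pilot = len(pilot)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = abs(mean(pooled[:n_pilot]) - mean(pooled[n_pilot:]))
        if diff >= observed:
            hits += 1
    # Add-one correction avoids reporting a p-value of exactly zero.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical cycle times in hours over the pilot window.
holdout_hours = [10, 12, 11, 13, 9, 12, 11, 10]
pilot_hours = [8, 9, 7, 10, 8, 9, 8, 9]

print(relative_change(holdout_hours, pilot_hours))
print(permutation_p_value(holdout_hours, pilot_hours))
```

With only a handful of observations per group the result is indicative at best, which is one reason to let a pilot run for the full 4-8 weeks before drawing conclusions.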

✈️ KEY INSIGHTS

To deploy AI effectively, start with narrow, repetitive workflows where ROI is already proven, measure impact rigorously, and maintain human oversight for high-stakes work. Use productivity gains to reallocate talent to higher-value tasks rather than aiming for headcount reduction.

Thanks to Lea Winkler for her help with this post.

Stay driven,

Andre